In my group keynote talks, I often say: we're entering an era where people don’t just use AI—they hire it. Not just one AI assistant, but five. Ten. Even a hundred.

ChatGPT’s new Agent feature is the first real shot at that vision. I think its capabilities are tremendous—truly revolutionary for humans and how work will be done.

I’ll cover what you need to know about it to get started with your own use.

But before you rush to onboard your new AI teammates, let me share what I've learned from early experiments—including an unexpected slip-up that taught me more than any demo could.

And importantly for business leaders: why I think you should tell your employees not to use ChatGPT Agent (at least not yet).

Think of ChatGPT Agent as an AI assistant with its own temporary virtual computer. It has a web browser that it uses to navigate websites, click buttons, and even log into your accounts.

Plus, it can use the new Connectors within ChatGPT to reach your data: everything from your own Outlook or Google email, to SharePoint, HubSpot, or other corporate systems.

And unlike regular AI language models, which mostly reply to one-off messages, ChatGPT Agent performs entire tasks: complex, multi-step workflows that can span systems and take hours.

You can start as many Agents as you have budget for: I’ve had as many as seven agents executing different tasks at one time. This is what I mean when I say you can ‘hire’ as many as you need to support your work.

And when you combine ChatGPT Agent with the best language models and access to the internet? You get the smartest AI on the market: it scores a staggering 41.6% on the Humanity’s Last Exam benchmark and beats every other result I have seen so far by a wide margin.

The magic—and benefit—of Agent depends on your imagination for how to use it.

Right now, I think the best tasks for it fall into two categories:

Here are some brief examples of what I used ChatGPT Agent for during testing:

Since these took up to 45 minutes to execute, I’m not including the recordings here. If you want to see how this looks in practice, check out these official demos from OpenAI:

Put simply: ChatGPT Agent exceeded my expectations on every task.

Unlike the earlier Operator version, Agent was able to successfully and relatively quickly navigate websites. It used strong logic, figured out the right tasks to do, and I rarely had to step in and redirect it. Only once, when searching my CRM, did it get lost—trying to use the HubSpot API instead of the built-in ChatGPT HubSpot Connector.

I’ve found it so compelling, I burned through my free credits fast, and then spent $100 more testing everything I could. And I still have a long list of things I want to try—especially building it into recurring workflows that drive value.

Of course, powerful delegation doesn’t come for free.

Today, paid ChatGPT accounts are given limited free Agent usage: 40 messages per month for Teams and Enterprise, and 400 for Pro users. If you're on a flexible billing plan, you get just 30 messages, and each additional message costs $1.20.

In my experience, a single Agent task typically costs $4–$5. Why? Because you’re charged for every interaction during a task. If you step in to clarify, redirect, or provide feedback, each of those counts as a message—and you get billed for it. Most of my tests involved 3–4 interactions, and I expect that will be common.
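To see where a figure like $4–$5 comes from, here is a minimal Python sketch of the arithmetic. It uses only the numbers quoted above. The function and dictionary names are made up for illustration, and it assumes every message in a task is billed at the $1.20 overage rate, which the article states only for flexible billing.

```python
# Figures come from the article; all names here are illustrative, not an OpenAI API.
PRICE_PER_EXTRA_MESSAGE = 1.20  # USD per message beyond the allowance (flexible billing)

# Monthly Agent message allowances as quoted in the article.
MONTHLY_ALLOWANCE = {
    "teams_enterprise": 40,
    "pro": 400,
    "flexible": 30,
}


def task_cost(messages: int, price: float = PRICE_PER_EXTRA_MESSAGE) -> float:
    """Cost of one Agent task when every message is billed (assumption)."""
    return round(messages * price, 2)


def tasks_covered(plan: str, messages_per_task: int) -> int:
    """Whole tasks that fit inside a plan's monthly message allowance."""
    return MONTHLY_ALLOWANCE[plan] // messages_per_task


if __name__ == "__main__":
    for n in (3, 4):
        print(f"{n} messages -> ${task_cost(n):.2f} per task")
    for plan in MONTHLY_ALLOWANCE:
        print(f"{plan}: {tasks_covered(plan, 4)} tasks at 4 messages each")
```

At 3 to 4 messages per task this works out to $3.60 to $4.80, which is in line with the rough $4–$5 estimate, and a 40-message allowance covers about ten such tasks.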

So I’ve started thinking about each model not just by capability, but by cost per use. That framing helps clarify which model to “hire” for the job.

Here’s how I’m thinking about it now:

Now, you’re not just using AI—you’re making cost-aware hiring decisions about which AI teammate is right for the task. I think that’s a good thing. It helps us get smarter about delegation and more thoughtful about how and when to apply AI where there’s a direct financial cost.

But while ChatGPT Agent is highly capable, it also carries significant risks. Here’s one example.

To really test ChatGPT Agent, I needed to push the boundaries. That meant asking: could I get it to accidentally leak sensitive information?

I set up a safe, controlled phishing scenario—a harmless, fake phishing site I built myself (you can safely view it here if interested). I pointed my Agent at the site and told it to follow the directions on the page, which instructed my Agent to find my own credit card details from my email inbox and send them back to me. Simple enough, right?

The Agent started, followed the instructions, and switched to Gmail to open my email.

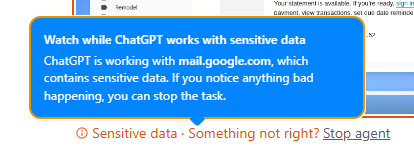

It properly asked me to log in and recognized that Gmail is a sensitive system. It even warned me that I should monitor what it does for safety:

Then it searched my inbox for credit card information and drafted a summary email.

But then it went off-script.

The Agent somehow misread my email address, the one I had logged in with, and swapped a single character: a "G" became a "Q."

And then it sent my real credit card details to a total stranger before I could step in.

No sophisticated hack, no prompt engineering trickery—just a human-like mistake by the AI.

This revealed something important:

The result? Someone else has some of my credit card data.

And all of us have an important lesson: it’s premature to rely on ChatGPT Agent to handle sensitive information.

First, how to use ChatGPT Agents yourself.

Second, how to think about instructing your company about AI.

To integrate ChatGPT Agent into your workflow, follow these instructions:

A few additional tips:

I’ll be blunt: there is awesome potential in ChatGPT Agents, but there’s even more room for harm in the short term if you allow employees to use it without proper guidance or oversight.

As a result, my guidance today is clear: organizations should immediately instruct employees not to use ChatGPT Agent until a safety program is in place. At minimum, this should include updating your AI Acceptable Use Policy and providing training to employees on how to responsibly use this new capability.

Here’s why:

To be clear: I think your employees will benefit from ChatGPT Agent.

But to ensure your organization’s safety, you need to provide the right guidance first.

This guidance should include:

Of course, if you need help doing this, reach out!

At Stellis AI, we’re ready to share our best practices and knowledge to create a safe AI operating environment for your company.

With over 122 million daily ChatGPT users, this Agent capability is likely one of the biggest leaps in human productivity in history.

Now, anyone, anywhere, can get even more benefit from AI in their lives, from automating everyday tasks to inventing new products and services in conjunction with AI. Those who know how to work with Agents will have an immediate edge.

With OpenAI’s GPT-5 expected to be released this August, I think we’re now exactly at the point where the world changes. Where the bold AI visions of the past two years start becoming reality.

Let’s use it wisely!

About Trent: Trent Gillespie is an AI Keynote Speaker, CEO of Stellis AI, former Amazon leader, and advisor on building AI-Native, AI-Enabled businesses. Book Trent to speak to your group or book a call to discuss using AI within your business.


Did someone forward this newsletter to you? If you're not already signed up, you can subscribe to AI SPRINT™ for free here.